Video compression is the process of reducing the size of video files to the point where they can fit on DVDs or be streamed over the internet. Compression on the transmitting end and decoding on the receiving end are both done using Codecs. The word Codec is short for COder/DECoder. There are five parts to the entire setup:

- the incoming signal – in this case video

- the encoding process, where we make the information ‘fit’ whatever we need it to fit

- the transmission path, where we’re getting from the transmitter to the receiver

- the decoder, where we’re doing our very best to take the encoded information and restore the incoming video signal as closely as we can

- the output signal – that is hopefully the same (in reality an approximation) as the input signal

As you’ll see in an upcoming article, you can’t store or transmit high definition or 4K video in uncompressed form without some expensive equipment, and you certainly cannot ‘stream’ uncompressed video over the internet from Netflix to your home. In fact – you can’t even store a movie on a DVD without doing some seriously fancy compression – there simply isn’t enough room!
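To get a feel for the scale, here is a rough back-of-the-envelope sketch. The frame sizes, the 30 frames per second, the 3 bytes per pixel and the two-hour running time are all assumptions picked for illustration. The 4.7 GB figure is the nominal capacity of a single-layer DVD.

```python
# Rough size of uncompressed video. Every input here is an illustrative assumption.
DVD_BYTES = 4_700_000_000          # nominal single-layer DVD capacity (4.7 GB)
MOVIE_SECONDS = 2 * 60 * 60        # assumed two-hour movie


def uncompressed_bytes(width, height, fps, seconds, bytes_per_pixel=3):
    """Bytes needed if every pixel of every frame is stored as-is."""
    return width * height * bytes_per_pixel * fps * seconds


# Assumed formats: DVD-sized standard definition and 1080p high definition.
sd_movie = uncompressed_bytes(720, 480, 30, MOVIE_SECONDS)
hd_movie = uncompressed_bytes(1920, 1080, 30, MOVIE_SECONDS)
hd_bits_per_second = uncompressed_bytes(1920, 1080, 30, 1) * 8

print(f"SD movie: {sd_movie / 1e9:.0f} GB, about {sd_movie / DVD_BYTES:.0f} DVDs")
print(f"HD movie: {hd_movie / 1e12:.2f} TB, about {hd_movie / DVD_BYTES:.0f} DVDs")
print(f"HD stream: {hd_bits_per_second / 1e9:.2f} Gbit/s")
```

Even with these modest assumptions, an uncompressed movie is many times larger than the disc it has to fit on.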

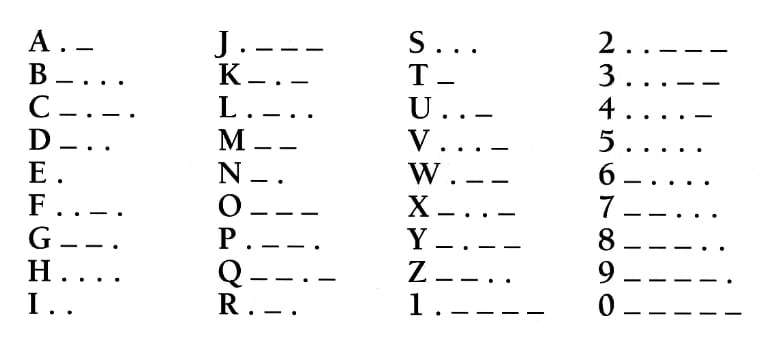

Encoding information probably sounds like something highly technical – but the reality is that encoding and decoding of information is done in a lot of places for a lot of reasons.

Here’s an interesting example that you probably know about: encoding text using Morse code. Why? Well – if you can only transmit by turning the transmitter on or off, you have to do something to translate our letters into something that works with the given transmission channel. Here’s how we do it. We encode letters into short bursts (dots) and long bursts (dashes), and now you have a way to transmit text.
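As a minimal sketch of that idea, the snippet below turns letters into dots and dashes and back again. The function names and the ` / ` word separator are choices made for this example, and only the letters A to Z are handled.

```python
# International Morse code for the letters A-Z (digits and punctuation omitted).
MORSE = {
    "A": ".-", "B": "-...", "C": "-.-.", "D": "-..", "E": ".", "F": "..-.",
    "G": "--.", "H": "....", "I": "..", "J": ".---", "K": "-.-", "L": ".-..",
    "M": "--", "N": "-.", "O": "---", "P": ".--.", "Q": "--.-", "R": ".-.",
    "S": "...", "T": "-", "U": "..-", "V": "...-", "W": ".--", "X": "-..-",
    "Y": "-.--", "Z": "--..",
}
REVERSE = {code: letter for letter, code in MORSE.items()}


def encode(text):
    """The encoder: letters become dots and dashes, words are split by ' / '."""
    words = text.upper().split()
    return " / ".join(" ".join(MORSE[ch] for ch in word) for word in words)


def decode(signal):
    """The decoder: dots and dashes become letters again."""
    words = signal.split(" / ")
    return " ".join("".join(REVERSE[c] for c in word.split()) for word in words)


message = encode("video codec")
print(message)
print(decode(message))
```

Note that the round trip gives back upper-case text. Like the video chain above, the output is an approximation of the input, not a perfect copy.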

Another one you might not think of is music. This is a much older problem: there was once no way to take music from one place to another. No iPod to take to work to listen to some good tracks – and so here’s a way to encode music. It’s really cool, because you can take it on a ship to a foreign land (think ‘transmission path’), and provided you have someone with an instrument who can read this sheet music (the decoder), you can go somewhere else, and listen to your favorite music. Yeah, it’s a bit of an ordeal compared to taking your phone – but once upon a time, that was the only way this could be done!

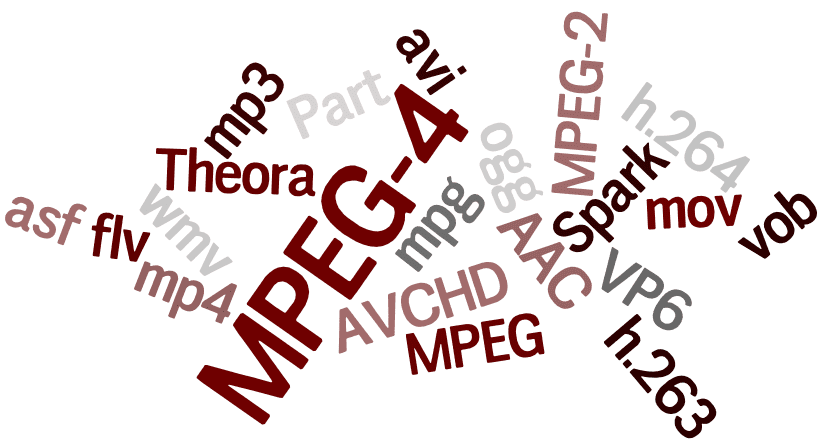

There are a large number of different Codecs and compression algorithms for video (and audio), available mostly as software solutions of some sort that take care of this compression and decoding ‘on the fly’, that is, in real time. Some are better at one task, such as representing colors, at the cost of being not so good at fast motion. Others might be good at fast motion, at the cost of image resolution or accuracy of colors. They’re all compromise solutions, as they aim to take a whole lot of information and somehow compress it to fit a disk or data pathway.

Most of them work on the principle of encoding and sending only the changes between adjacent images, although there are obviously a lot of other methods and techniques that come into play as well. Imagine you have two adjacent frames of a video that contain exactly the same image – a house, maybe, with the videographer not moving the camera from frame to frame. The first frame needs to be transmitted somehow, but if we assume that the house is mostly the same color, instead of transmitting each pixel we could tell the encoder to just transmit the color value for a large part of the house, and tell it where the boundaries of that color are. We’d save a huge amount of data that really doesn’t need to be transmitted. Same for the blue sky, or a piece of tarmac road – hey, the sky is blue, right, so why transmit each individual pixel? Might as well tell it where the sky is, and give it a value for the blue.
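One very simple way to picture ‘send the color and where it stops’ is run-length encoding of a single row of pixels. This is a stand-in chosen for illustration, not what any particular video Codec actually does, and the color names and function names are made up for the example.

```python
def rle_encode(row):
    """Collapse a row of pixels into (color, run length) pairs."""
    runs = []
    for pixel in row:
        if runs and runs[-1][0] == pixel:
            runs[-1][1] += 1          # same color as before: extend the run
        else:
            runs.append([pixel, 1])   # color changed: start a new run
    return [(color, count) for color, count in runs]


def rle_decode(runs):
    """Rebuild the full row of pixels from the (color, run length) pairs."""
    row = []
    for color, count in runs:
        row.extend([color] * count)
    return row


# Hypothetical row: mostly sky, with a small cloud in it.
row = ["blue"] * 12 + ["white"] * 3 + ["blue"] * 5
runs = rle_encode(row)
print(runs)
print(len(row), "pixels sent as", len(runs), "runs")
```

The flatter the picture, the fewer runs are needed. A row full of fine detail would gain little or nothing from this trick.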

Here’s a frame sequence that we will imagine needs to be encoded. Let’s start with the first frame. Here’s an ordinary street scene. Some tarmac, houses, and some trees.

We will assume that the camera didn’t move, so the second frame will be the same as the first frame – so why transmit the second frame at all? We’ll just tell the other end to repeat the same image.

Here’s the third frame.

Have a close look. The third frame of this video has a car driving through the crossroads – so why not ONLY encode and transmit the part of the image where the car is? The rest of the image is still just the house, so there’s no point in retransmitting all that video.

Now – we really saved some time there! The rest of the image stayed the same, so you can imagine how much it helped to only transmit that tiny part of the image with an X and Y coordinate to tell it where to put it.
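The three frames so far can be sketched with tiny grids of numbers standing in for pixels. The grid size, the pixel values and the function names are all assumptions made for this example. The encoder finds the smallest rectangle that changed and sends it with its X and Y position, and the receiver pastes it onto the frame it already has.

```python
def changed_region(prev, curr):
    """Return (x, y, patch) for the smallest rectangle covering every changed
    pixel, or None when the two frames are identical."""
    changed = [(x, y)
               for y, row in enumerate(curr)
               for x, value in enumerate(row)
               if value != prev[y][x]]
    if not changed:
        return None
    xs = [x for x, _ in changed]
    ys = [y for _, y in changed]
    x0, x1, y0, y1 = min(xs), max(xs), min(ys), max(ys)
    patch = [row[x0:x1 + 1] for row in curr[y0:y1 + 1]]
    return x0, y0, patch


def apply_patch(prev, update):
    """Receiver side: repeat the previous frame, then paste the patch if any."""
    frame = [row[:] for row in prev]
    if update is not None:
        x0, y0, patch = update
        for dy, patch_row in enumerate(patch):
            frame[y0 + dy][x0:x0 + len(patch_row)] = patch_row
    return frame


ROAD, CAR = 0, 9                                  # made-up pixel values
frame1 = [[ROAD] * 8 for _ in range(6)]           # the empty street
frame2 = [row[:] for row in frame1]               # nothing moved
frame3 = [row[:] for row in frame1]
frame3[3][1:3] = [CAR, CAR]                       # a 2x2 'car' drives in
frame3[4][1:3] = [CAR, CAR]

print(changed_region(frame1, frame2))             # nothing to send
update = changed_region(frame2, frame3)
print(update)                                     # position plus a tiny patch
```

Here the whole frame is 48 values, while the update for the third frame is just two coordinates and four pixels.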

Let’s have a look at the next frame.

In the next frame, the car is elsewhere in the image, so you transmit the bit of the image where the car was before, to ‘repair’ its background (in this case a bit of tarmac that’s pretty well all the same color, so we’ll tell the receiver to get out a gray crayon and just overpaint that bit of the picture), and then you take the image of the car and you overlay it elsewhere on the image. In fact – we’d probably get away with telling it to take the same picture of the car we sent last time, and just have it move that car to its new position. That was easy!
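Here is a minimal sketch of that ‘move the car’ instruction, again using a small grid of made-up pixel values. The function name, its parameters and the single flat background color are assumptions for illustration, and a real Codec’s motion handling is considerably more involved.

```python
def move_block(frame, src, dst, size, background):
    """Receiver side: copy a w x h block from src to dst and repaint the
    area it left behind with a flat background color."""
    (sx, sy), (dx, dy), (w, h) = src, dst, size
    block = [row[sx:sx + w] for row in frame[sy:sy + h]]   # the car we already have
    out = [row[:] for row in frame]
    for y in range(sy, sy + h):                 # 'gray crayon' over the old spot
        out[y][sx:sx + w] = [background] * w
    for j, block_row in enumerate(block):       # paste the car at its new spot
        out[dy + j][dx:dx + w] = block_row
    return out


ROAD, CAR = 0, 9                                # made-up pixel values
frame3 = [[ROAD] * 8 for _ in range(6)]
frame3[3][1:3] = [CAR, CAR]                     # 2x2 car at x=1, y=3
frame3[4][1:3] = [CAR, CAR]

# The whole update is: old position, new position, block size, fill color.
frame4 = move_block(frame3, src=(1, 3), dst=(5, 3), size=(2, 2), background=ROAD)
for row in frame4:
    print(row)
```

No pixels of the car are sent at all this time, only a handful of numbers describing where it went.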

You can see how such an encoder might be able to compress the video – basically, instead of transmitting 5 frames of video, we could transmit 1 frame (having ‘compressed’ the data by not even transmitting all of the individual pixels), followed by a picture of a car and the small patch of background that has to be restored once the car has moved on. We’ve compressed the data enormously!

OK, now let’s imagine we’re panning the scene, to the right.

Instead of sending an entirely new frame, why not tell the encoder to just encode the fact that the entire picture has moved, and where it has moved to, so that we only need to fill in the strip of the image that is new? Hey, stick this bit on the right side of the previous image, and you’re done. Now that was easy, and quite efficient!
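The pan can be sketched the same way. In this minimal example, with an assumed function name and made-up pixel values, the receiver slides the previous frame sideways by the amount it is told and sticks the new strip on the right.

```python
def pan_right(prev, shift, new_strip):
    """Receiver side: the camera panned right by `shift` pixels, so the old
    picture slides left and `new_strip` supplies the new columns on the right."""
    return [row[shift:] + strip for row, strip in zip(prev, new_strip)]


# Tiny hypothetical frame: 2 rows of 5 pixels.
prev = [[1, 2, 3, 4, 5],
        [6, 7, 8, 9, 10]]
strip = [[11, 12],
         [13, 14]]                      # the only new pixels that get sent

frame = pan_right(prev, shift=2, new_strip=strip)
print(frame)

sent = sum(len(s) for s in strip)
total = sum(len(r) for r in frame)
print(f"sent {sent} of {total} pixels, plus the shift amount")
```

The slower the pan, the narrower the strip, and the less there is to send.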

We’ve now transmitted an entire video by really only transmitting one frame (and we cleverly compressed that by not sending all of it but instead doing color banding), and adding some changes here and there.

These are just SOME of the techniques that Codecs use to transfer information. Obviously the result is not perfect. There’s a whole load of smoke and mirrors going on here, and Codecs often depend on your eyes not being able to catch the issues. If there’s a problem with a single frame of video, and we’re talking about the TV in your living room, it’s quite likely that you’ll see just what you’re intended to see: you never saw any detail in the car as it moved. You never saw that the right side of the image during that pan really didn’t look that good for a frame or so, and that it took a couple of frames to get the quality of the image back to what it should be.

I’m sure you’ve seen ‘color-banding’, where the sky looks like a patchwork of different blues rather than a nice gradient. Sometimes you’ll see a few frames where the image on your TV looks like a whole bunch of ugly-looking squares – the image is KIND of there, but it’s not good.

All that happens when the data is compressed a little too much (it often happens when there’s a glitch and the transmission path has a brief problem), and the encoding process doesn’t encode ENOUGH of the different color samples and borders to make up that patchwork. It’s a bit like you’re looking at the sky, and you’ve only got 4 different blue pens to make a picture of it. Not ideal, but on the whole you’ll get the idea.
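The ‘4 blue pens’ effect is easy to reproduce. The sketch below snaps a smooth gradient of brightness values to only four allowed levels. The 0 to 255 range, the sample spacing and the function name are assumptions for illustration.

```python
def quantize(value, levels, max_value=255):
    """Snap a 0..max_value sample to the nearest of `levels` evenly spaced values."""
    step = max_value / (levels - 1)
    return round(round(value / step) * step)


# A smooth sky: 18 evenly spaced brightness samples from dark to light.
gradient = list(range(0, 256, 15))

# The same sky drawn with only 4 'pens'.
banded = [quantize(v, 4) for v in gradient]

print(gradient)
print(banded)
print(len(set(gradient)), "distinct shades reduced to", len(set(banded)))
```

Neighboring samples that used to differ slightly now share one value, and the places where the value jumps are the visible edges of the bands.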

Basically, Codecs are essential in order to fit video through restricted-size data paths like the internet (think Netflix and the like, and imagine trying to poke a house through a hosepipe to get an idea of the scale of the problem), and to allow it to be stored on devices like hard disks, and for that matter your computer and your iPad. You wouldn’t be happy to have one movie take up all the storage on your computer or tablet.

Next time, we’ll go into some more depth on encoding and decoding, and we’ll discuss how MPG files work. Then we’ll also do an article to discuss data rates and talk about compressed versus uncompressed video. Remember – these types of encoding mechanisms work – but in reality, you’re of course not getting the data in uncompressed form, so something, somewhere has to give! For some applications, you can get away with this – your kids watching Frozen in the car, you watching a movie at home, you’ll probably be fine with it. Having a corporate presentation to critical clients on a 16’ wide video wall is, of course, another story altogether.